ChatGPT vs Gemini vs Claude: Which AI has the best eye?


Holding a magnifying glass up to AI perceptions


(Image credit: Shutterstock)


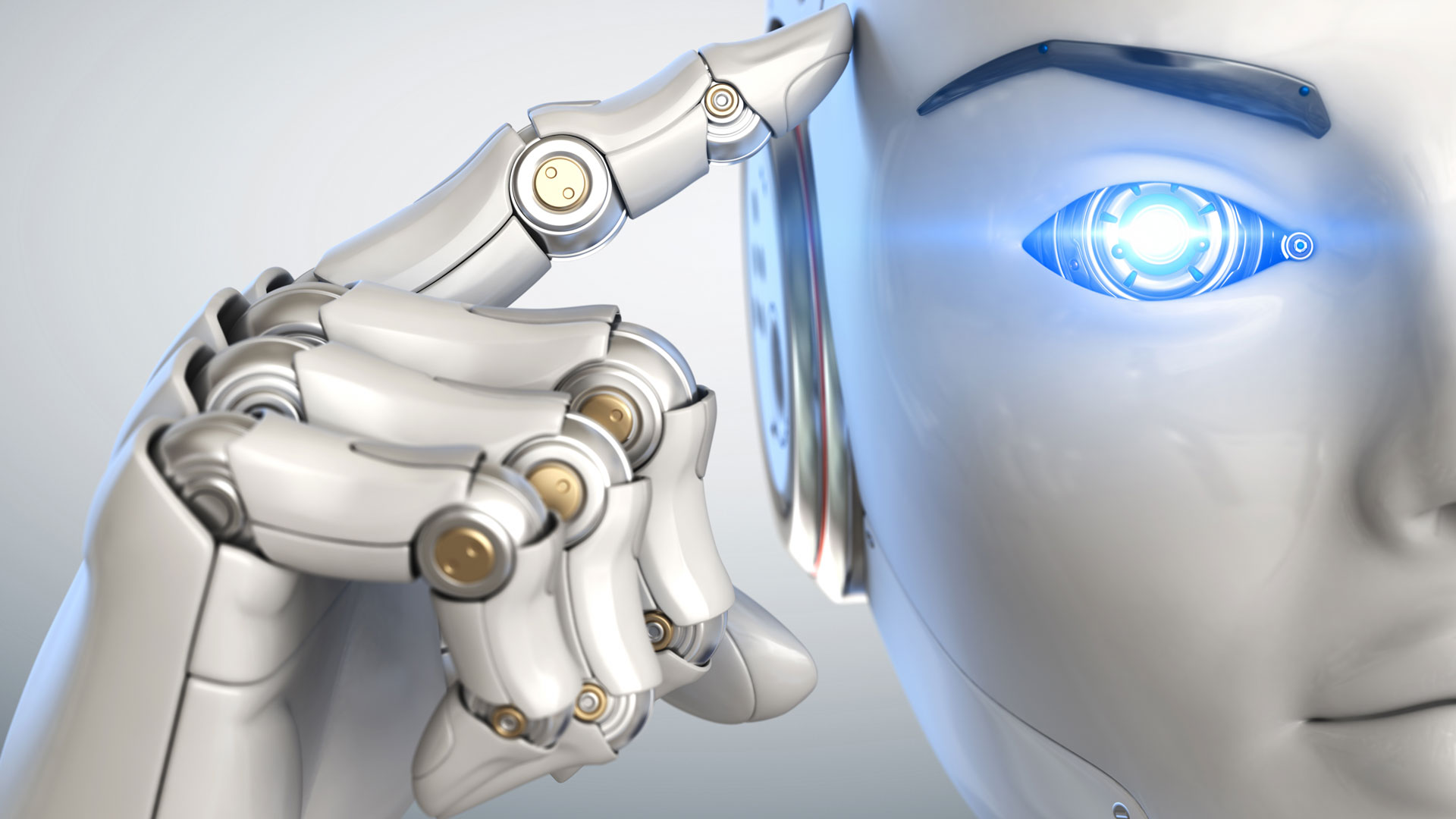

Every new AI model insists that it is the greatest AI model ever in every way you can imagine. Obviously, that can't be true, but how well they each perform at different tasks and roles isn't always clear, and even supposedly neutral, quantitative tests might not accurately convey what they feel like for the average user.

One particular example is multimodal interpretation: looking at an image and deciphering what's in it and what it might mean. It's something humans do instantly and instinctively, but AI models are newer to the role. Getting an AI model to accurately interpret a chaotic image might matter more than you would think. If an AI model can identify objects, it could help you catalog belongings for insurance, identify hazards in a home, or even decipher a transit map. An AI model that can make sense of complex, layered visual information without inventing details is incredibly useful.


Times Square

Times Square is a sensory overload. If a multimodal model can correctly parse Times Square, it can parse pretty much anywhere.

ChatGPT 5.1 greets Times Square in a structured way, splitting its description into different sections. It identified the major signs for Wicked, Phantom, Jersey Boys, Aldo, and Express. It also picked out the hot dog cart, the yellow cabs, the buses, the people crossing the street, and the street markings without dramatizing anything. It quoted bits of text visible on the signs, including smaller phrases like “Tonight belongs to…” under Phantom of the Opera. ChatGPT 5.1 was almost chatty, saying, “This looks like peak evening energy, everything is competing for attention.”

Gemini 3 Pro approaches the Times Square image like a forensic analyst. It doesn’t just list what’s present; it describes spatial relationships, angles, and color composition. For example, it notes how the green glow from the Wicked sign is “reflecting across the adjacent building surfaces,” something neither of the others bothered to mention. It calls out the crosswalk pattern as “wide, staggered diagonal lines indicating heavy pedestrian flow” and identifies the bus as an MTA vehicle without embellishment.

Its text recognition is excellent. It doesn’t misread partial signs and avoids the temptation to guess. When something is not readable, it simply says, “The text is present but illegible due to angle and resolution.” This restraint is exactly what you want in a multimodal model.

Claude is the model most likely to turn Times Square into literature. It described the scene as "a vibrant nighttime photograph of Times Square in New York City, capturing the iconic energy and spectacle of the area." When it sticks to plain description, Claude is sharp. It identifies the major signs and their colors, and gets many of the visual details right, including shadows, reflections, and the density of foot traffic.

Renaissance painting

Michelangelo’s Last Judgment is the visual equivalent of handing a model a thousand-piece puzzle. There are hundreds of figures, complex poses, overlapping limbs, and subtle symbolic moments, all packed with dense narrative detail. This image tests fine-grained figure identification, spatial reasoning, and recognition of artistic intention.


ChatGPT 5.1 approaches the painting with academic clarity. It identifies “a central Christ figure surrounded by a swirling mass of human forms,” notes the separation of blessed and damned figures, and describes distinct clusters such as angels blowing trumpets, resurrected bodies rising from the earth, and demons hauling souls downward. Crucially, it does not hallucinate specific identities. It refers to “figures in the lower left being raised from graves” or “angels carrying symbols of the Passion,” avoiding the temptation to name characters with false confidence.

Gemini 3 Pro gives me the closest thing to a real art historian’s breakdown. It doesn’t just identify clusters of figures; it identifies the structural geometry: “a radial composition centered on a dynamic Christ figure with surrounding bodies arranged in concentric arcs.” It notes the directionality of motion, the tension of Michelangelo’s musculature, and even the subtle shading differences in various clouds.

It offers grounded specifics, such as “The lower right quadrant contains figures being pulled by demons toward a darker boundary area,” and it refrains from guessing identities unless they are canonical and widely recognized. It reads the emotional expressions of the figures without drifting into melodrama: “Many figures display anguish, awe, or supplication through exaggerated gesture and tension.”

Claude made sure to point out the controversy over the painting's nudity before waxing lyrical about the work as a whole, calling it “a magnificent vortex of bodies spiraling through divine judgment.” It immediately identified Christ in the center, Mary beside him, and the upward sweep of saved figures versus the downward turmoil of the damned. Otherwise, it was relatively brisk compared with its Times Square response, simply going through each section and giving a detailed list of the figures and how to tell them apart.

Messy room

Figuring out what's in a chaotic room is a deceptively hard task: different surfaces, piles, tangled cables, overlapping papers, and much more, all crammed into a small space. If an AI can work out what's here, it should be able to solve all sorts of domestic problems.

ChatGPT 5.1 took an inventory, starting by identifying the general layout of the room. It saw the tangled cords, the documents, the plastic storage bins, and the piles of paper. Then it simply started listing things from left to right: “The left table contains a large number of items, including cables, binders, manuals, and small electronic devices.” It identified the green crates under the right table and the blue binders stacked on top. It was mostly accurate, although it occasionally gave things vague labels such as “a small device” where another model might attempt a more specific guess.

Gemini 3 Pro went for an ultra-precise list, breaking down every detail from the materials to the colors to the shapes and even the possible functions of devices. It described the window lighting, the shadows on the floor, and the size of the stacks of paper. It even pointed out the old patterned carpet partially peeking out from under a pile, a detail neither ChatGPT nor Claude mentioned. Unlike the others, Gemini attempted subtle deductions without overcommitting. “The assortment of binders and scattered forms suggests the space is used for administrative or organizational work,” it said.

Claude reacted to the room by dryly understating that it “appears to be in a state of disorganization.” It organized its list by area: the furniture and what's on it, the floor and what's on it, and so on. It correctly identified many objects, such as the binders, crates, wires, plants, bags, and papers. But it also occasionally reported things that weren't actually there, such as describing “a stack of envelopes” that is really just a pile of printed sheets, or calling a folded tarp “a garment bag.”

Conclusion

Each model performed reasonably well. I felt that ChatGPT 5.1 was careful and reassuringly accurate in most cases, but it tended to drift off topic after a long list, and it occasionally labeled a partially seen object with too much confidence.

Claude Opus 4.5 had some amusing descriptions and could be imaginative while staying accurate in most cases, but sometimes its interpretations were a tad too creative. When you need strict precision, especially in chaotic scenes, its artistic impulses can get in the way.

Gemini 3 Pro is the model that consistently sees most clearly. It excels at distinguishing overlapping objects, at avoiding hallucination, at reading text accurately, and at contextualizing scenes. It describes visual relationships, lighting, composition, and texture in a way that the others don’t. It feels most like a genuine multimodal perception system rather than a text model reacting to pixels. So, while any of the three would be fine to use in most cases, I recommend Gemini 3 Pro if what you really want from an AI model is the ability to see what's actually happening in the images you share.


Eric Hal Schwartz, Contributor

Eric Hal Schwartz is a freelance writer for TechRadar with more than 15 years of experience covering the intersection of the world and technology. For the last five years, he served as head writer for Voicebot.ai and was on the leading edge of reporting on generative AI and large language models. He's since become an expert on the products of generative AI models, such as OpenAI’s ChatGPT, Anthropic’s Claude, Google Gemini, and every other synthetic media tool. His experience runs the gamut of media, including print, digital, broadcast, and live events. Now, he's continuing to tell the stories people want and need to hear about the rapidly evolving AI space and its impact on their lives. Eric is based in New York City.
